The emergence of AI chatbots, notably ChatGPT, has sparked ongoing debate over the legitimacy of these tools in academia.

Since its launch in late 2022, ChatGPT has earned a notorious reputation in academic spaces for making cheating easier and more accessible. Don’t get it twisted: the concerns AI presents for students are real and valid.

But at what point do these concerns stretch so far that using AI at all is wrongly demonized?

From what I’ve witnessed, both online and in the real world, there are two camps in this debate. The first is made up of high-achieving students who generally abhor any use of AI and show an almost elitist disdain for anyone who does use it. The second, larger camp is made up of students who are indifferent to the legitimate issues AI raises in academia. The first camp is much louder than the second.

From TikToks and Reels to real-life conversations, mentioning that you’ve used ChatGPT to help with an assignment, even responsibly, is bound to draw criticism. The kind of anti-AI stance these narratives take is flawed, because it rejects an objective reality.

AI can and should be used responsibly. While what counts as responsible use varies from person to person, I think we can agree on a general consensus.

AI as a respectable research tool

The biggest benefit AI chatbots offer is more targeted search results, which make research more efficient than ever before. A counterargument might raise the question of what actually constitutes research: is it the time spent locating the right sources, or the knowledge extracted from them? Personally, I think it’s both.

Traditional, more “strenuous” research methods are still a necessity for any half-decent analysis or deep dive into a subject, and AI should not replace them. But adding AI to the process makes research and learning more efficient and fruitful.

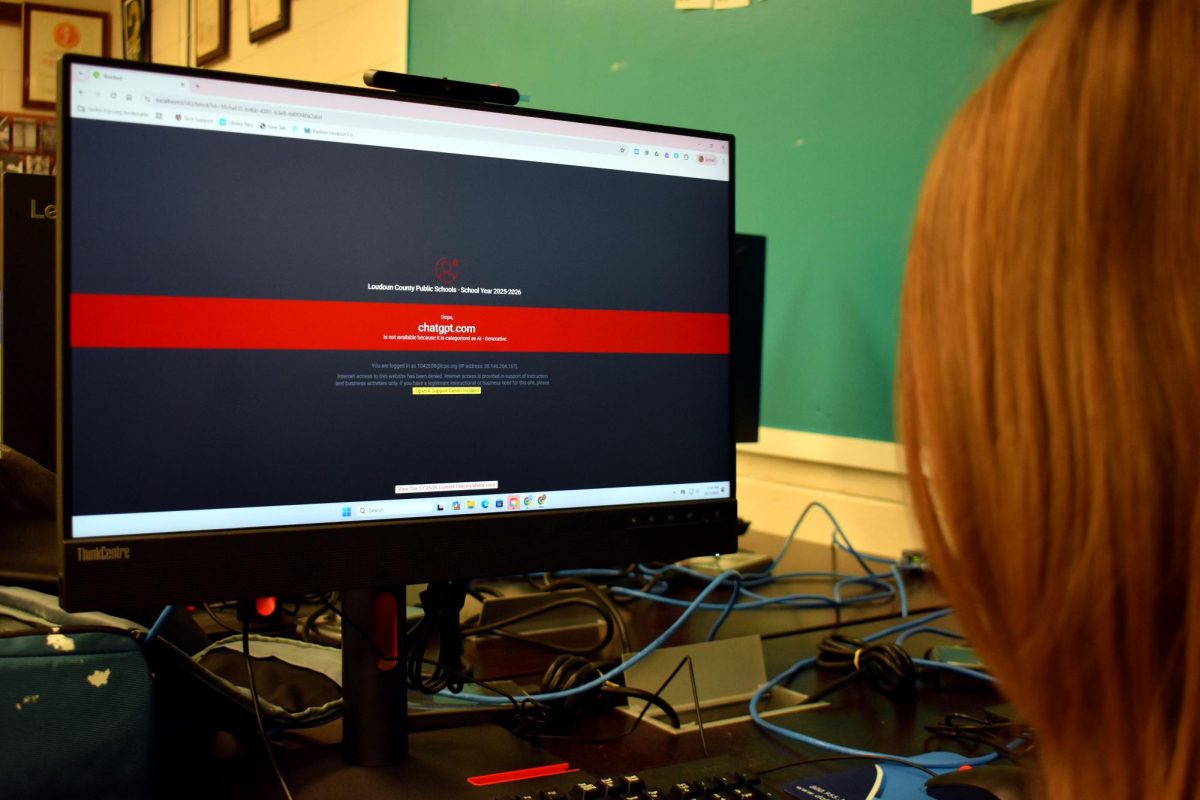

AI exists, and it is everywhere. It will continue to carve out a role in both academia and the workforce. While I’m not exactly stoked about this, and I doubt many people are, it’s irrational to discard AI entirely given the benefits it offers as a research tool.

Using a more targeted search engine for research isn’t cheating; we’ve been using a slightly less efficient Google for decades without the same scrutiny.

There is also a notable difference between relying on AI entirely and using it to strengthen one’s own knowledge and understanding of a subject.

Changing the narrative

Irresponsible use of AI in academia is a major issue, with some students having AI write entire essays or reports for them.

But two truths can coexist: the problem is real, and the fault lies not with AI in and of itself but with how we use it.

Using AI doesn’t, and shouldn’t, mean using it to write entire essays or create presentations from scratch. Not all AI use is dishonorable, even if the most common or visible instances seem to be.

This can change, incrementally of course, but it starts with establishing a standard that doesn’t demonize AI entirely and, in doing so, alienate the students who use it.